Ollama & LLaVA Setup


Overview

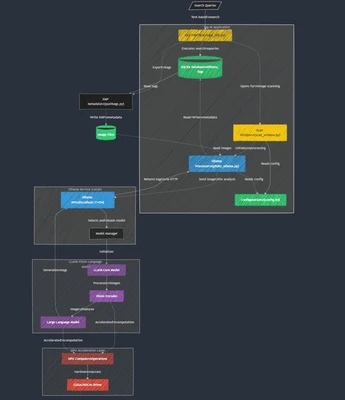

Tag-AI's local image processing relies on two key components: Ollama, a lightweight local AI server, and LLaVA, a multimodal vision-language model.

Together, these components enable completely local, private image analysis with no data sent to external services.

Automatic Setup

The Tag-AI setup wizard automatically handles Ollama and LLaVA installation:

Ollama Installation

During setup:

- Tag-AI detects if Ollama is already installed

- If not found, it downloads the appropriate installer for your OS

- The installer runs with appropriate permissions

- Tag-AI verifies Ollama is properly installed
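The detection and verification steps can be approximated as follows. This is a minimal sketch, not Tag-AI's actual setup code. It assumes the `ollama` CLI is on the system PATH once installed, and the helper names `find_ollama` and `ollama_version` are hypothetical.

```python
import shutil
import subprocess
from typing import Optional


def find_ollama(executable: str = "ollama") -> Optional[str]:
    """Return the full path of the Ollama CLI if it is on PATH, else None."""
    return shutil.which(executable)


def ollama_version(path: str) -> Optional[str]:
    """Run `<path> --version` and return its output, or None if it fails."""
    try:
        result = subprocess.run(
            [path, "--version"],
            capture_output=True,
            text=True,
            timeout=10,
            check=True,
        )
    except (OSError, subprocess.SubprocessError):
        # OSError: the binary is missing or not executable.
        # SubprocessError: non-zero exit status or timeout.
        return None
    # Some versions print warnings (e.g. no running server) to stderr.
    return result.stdout.strip() or result.stderr.strip() or None
```

A setup routine would call `find_ollama()` first and only fall back to downloading an installer when it returns `None`.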

LLaVA Model Download

After Ollama is installed:

- Tag-AI initiates the LLaVA model download

- Progress is displayed in the setup wizard

- The model is downloaded and prepared by Ollama (~4GB)

- Tag-AI verifies the model is properly installed
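The download progress shown in the wizard can come from Ollama's `/api/pull` endpoint, which streams one JSON object per line with `status` and, while layers download, `total` and `completed` byte counts. The sketch below assumes that endpoint on the default port and a recent Ollama version that accepts a `model` field in the request body (older versions used `name`); `pull_progress` and `pull_model` are hypothetical helpers, not Tag-AI functions.

```python
import json
import urllib.request
from typing import Iterable, Iterator, Optional, Tuple

PULL_URL = "http://localhost:11434/api/pull"  # Ollama's default address


def pull_progress(lines: Iterable[bytes]) -> Iterator[Tuple[str, Optional[float]]]:
    """Turn streamed pull responses into (status, percent) pairs.

    percent is None for steps that report no size (e.g. "pulling manifest").
    """
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue
        event = json.loads(raw)
        if "error" in event:
            raise RuntimeError(event["error"])
        total = event.get("total")
        completed = event.get("completed", 0)
        percent = 100.0 * completed / total if total else None
        yield event.get("status", ""), percent


def pull_model(model: str = "llava") -> None:
    """Ask a running Ollama server to download `model`, printing progress."""
    body = json.dumps({"model": model}).encode("utf-8")
    request = urllib.request.Request(
        PULL_URL, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        # Iterating an HTTP response yields it line by line.
        for status, percent in pull_progress(response):
            print(status if percent is None else f"{status}: {percent:.1f}%")
```

The final event has the status `success`, which is a natural point to run the "model is properly installed" check.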

The automatic setup process requires internet connectivity for the initial download, but once completed, Tag-AI can process images entirely offline.

Manual Setup

If you need to manually set up Ollama and LLaVA:

Manual Ollama Installation

Windows

- Download the Ollama installer from ollama.ai

- Run the installer with administrator privileges

- Follow the installation prompts

- Verify Ollama is running in the system tray

macOS

- Download Ollama for Mac from ollama.ai

- Open the .dmg file

- Drag Ollama to the Applications folder

- Launch Ollama from Applications

- If prompted about an unidentified developer, open System Settings → Privacy & Security (System Preferences → Security & Privacy on older macOS versions) and click "Open Anyway"

Linux

- Open a terminal

- Run: `curl -fsSL https://ollama.ai/install.sh | sh`

- Follow any additional prompts

- Verify the installation with `ollama --version`

Manual LLaVA Model Installation

After installing Ollama:

- Open a terminal or command prompt

- Run: `ollama pull llava`

- Wait for the download to complete (~4GB)

- Verify with `ollama list` (you should see `llava` in the list)
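The `ollama list` check can also be scripted. The sketch assumes the command's usual layout, a header row (`NAME`, `ID`, `SIZE`, `MODIFIED`) followed by one model per line with the name first. `installed_models` and `has_model` are hypothetical helpers.

```python
import subprocess
from typing import List


def installed_models(list_output: str) -> List[str]:
    """Extract model names (first column) from `ollama list` output."""
    names = []
    for line in list_output.splitlines()[1:]:  # skip the header row
        fields = line.split()
        if fields:
            names.append(fields[0])
    return names


def has_model(names: List[str], wanted: str) -> bool:
    """True if `wanted` is installed; a bare name such as "llava" is
    compared against the ":latest" tag Ollama adds by default."""
    if ":" not in wanted:
        wanted += ":latest"
    return wanted in names


def llava_installed() -> bool:
    """Run `ollama list` and check for the default llava tag."""
    output = subprocess.run(
        ["ollama", "list"], capture_output=True, text=True, check=True
    ).stdout
    return has_model(installed_models(output), "llava")
```

This compares tag names only, so `llava:7b` and `llava:latest` count as different entries even when they point to the same weights.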

LLaVA Model

About LLaVA

LLaVA (Large Language and Vision Assistant) is a multimodal AI model that combines text and image understanding capabilities:

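- Based on the open-source LLaVA research project, developed by researchers at the University of Wisconsin–Madison, Microsoft Research, and Columbia University

- Pairs a CLIP vision encoder with a large language model (Vicuna, a Llama-based model, in the original release)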

- Fine-tuned to analyze and describe image content

- Optimized for running on consumer hardware

Model Location

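The LLaVA model is stored in Ollama's model directory:

- Windows: `%USERPROFILE%\.ollama\models`

- macOS: `~/.ollama/models`

- Linux: `~/.ollama/models`, or `/usr/share/ollama/.ollama/models` when Ollama runs as the system service created by the install script

If the `OLLAMA_MODELS` environment variable is set, Ollama uses that directory instead.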
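The lookup rules above can be written as a small function, for example to check disk usage or confirm a download landed where expected. This is a sketch that encodes Ollama's documented defaults; `ollama_models_dir` and its `linux_service` flag are hypothetical names.

```python
from pathlib import Path
from typing import Mapping


def ollama_models_dir(
    sys_platform: str,
    env: Mapping[str, str],
    home: Path,
    linux_service: bool = False,
) -> Path:
    """Return the directory Ollama stores models in.

    OLLAMA_MODELS overrides the default. Otherwise models live in
    ~/.ollama/models for the current user (on Windows, ~ is %USERPROFILE%).
    The Linux install script's systemd service runs as the "ollama" user,
    whose home is /usr/share/ollama.
    """
    override = env.get("OLLAMA_MODELS")
    if override:
        return Path(override)
    if sys_platform.startswith("linux") and linux_service:
        return Path("/usr/share/ollama/.ollama/models")
    return home / ".ollama" / "models"
```

On the current machine it would be called as `ollama_models_dir(sys.platform, os.environ, Path.home())`, after importing `sys` and `os`.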

Model Versions

Tag-AI uses the standard LLaVA model by default, but Ollama offers several variants:

- llava (the same model as llava:7b) - Standard 7B parameter model with a good balance of speed and quality

- llava:13b - Larger 13B parameter model for better quality

- llava:34b - Largest 34B parameter model for highest quality (requires more GPU memory)

Alternative Models

Advanced users can use alternative vision-language models with Tag-AI:

Compatible Alternative Models

- bakllava - Alternative vision model with different tag styles

- cogvlm - Another vision-language model with different characteristics

- moondream - Smaller, faster model with reasonable quality

Installing Alternative Models

- Open a terminal or command prompt

- Run: `ollama pull model_name` (e.g., `ollama pull bakllava`)

- Wait for the download to complete

Configuring Tag-AI for Alternative Models

- Open the Configuration Editor (Actions → Edit Config)

- Locate the `[tagger_ollama]` section

- Change `model_name` to your desired model (e.g., `bakllava`)

- Save the configuration
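If you prefer to script the change instead of using the Configuration Editor, Python's `configparser` can update the value. This sketch assumes Tag-AI's configuration is an INI-style file (suggested by the `[tagger_ollama]` section header, but not confirmed here); `with_model_name` is a hypothetical helper that works on the file's text.

```python
import configparser
import io


def with_model_name(config_text: str, model_name: str) -> str:
    """Return a copy of an INI-style config with [tagger_ollama] model_name set.

    Interpolation is disabled so values containing "%" (for example Windows
    paths such as %LOCALAPPDATA%) are kept literally.
    """
    config = configparser.ConfigParser(interpolation=None)
    config.read_string(config_text)
    if not config.has_section("tagger_ollama"):
        config.add_section("tagger_ollama")
    config.set("tagger_ollama", "model_name", model_name)
    buffer = io.StringIO()
    config.write(buffer)
    return buffer.getvalue()
```

Read the config file, pass its text through `with_model_name(text, "bakllava")`, and write the result back. Note that `configparser` drops comments when it rewrites a file, so keep a backup or use the Configuration Editor if your config contains comments.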

Some alternative models may produce different tag styles or quantities. Test with a small batch of images before processing your entire library.

Advanced Configuration

Ollama API Endpoint

By default, Tag-AI connects to Ollama at `http://localhost:11434/api/generate`. If you've customized your Ollama setup:

- Open the Configuration Editor

- Locate the `[tagger_ollama]` section

- Modify the `ollama_endpoint` value

- Save the configuration
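To see what a request to this endpoint looks like, the sketch below sends one image to Ollama's `/api/generate` using its documented fields (`model`, `prompt`, base64-encoded `images`, and `stream`). It is an illustration, not the code in Tag-AI's `photo_ollama.py`; `build_generate_payload` and `describe_image` are hypothetical names.

```python
import base64
import json
import urllib.request
from pathlib import Path

# Tag-AI's documented default; match it to your ollama_endpoint setting.
OLLAMA_ENDPOINT = "http://localhost:11434/api/generate"


def build_generate_payload(model: str, prompt: str, image_bytes: bytes) -> dict:
    """Build a non-streaming /api/generate request carrying one image."""
    return {
        "model": model,
        "prompt": prompt,
        # Ollama expects images as base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        "stream": False,  # return one JSON object instead of a stream
    }


def describe_image(
    image_path: str,
    prompt: str,
    model: str = "llava",
    endpoint: str = OLLAMA_ENDPOINT,
    timeout: float = 300.0,
) -> str:
    """Send one image to Ollama and return the model's text reply."""
    payload = build_generate_payload(model, prompt, Path(image_path).read_bytes())
    request = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["response"]
```

The generous timeout reflects that the first request after startup also loads the model into memory, which can take noticeably longer than later requests.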

Custom Ollama Configuration

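For advanced Ollama setup, the Ollama server is configured mainly through environment variables, which must be set before the server starts:

- `OLLAMA_HOST` sets the address and port Ollama listens on (default `127.0.0.1:11434`); if you change it, update `ollama_endpoint` to match

- `OLLAMA_MODELS` sets the model storage directory

- `OLLAMA_MAX_LOADED_MODELS` and `OLLAMA_NUM_PARALLEL` limit how many models and parallel requests Ollama keeps in memory; on NVIDIA systems, `CUDA_VISIBLE_DEVICES` selects which GPUs are used

- To apply them: on Windows, set user environment variables and restart Ollama; on macOS, use `launchctl setenv` and restart Ollama; on Linux with the systemd service, add `Environment=` lines via `systemctl edit ollama.service` and restart the service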
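When you launch the server yourself rather than through the tray app or system service, the variables can be passed directly to `ollama serve`. This is a sketch under that assumption; `ollama_server_env` and `start_ollama` are hypothetical helpers, and only `OLLAMA_HOST` and `OLLAMA_MODELS` are handled.

```python
import subprocess
from typing import Dict, Mapping, Optional


def ollama_server_env(
    base: Mapping[str, str],
    host: Optional[str] = None,
    models_dir: Optional[str] = None,
) -> Dict[str, str]:
    """Copy `base` and add Ollama server settings.

    OLLAMA_HOST sets the bind address and port (default 127.0.0.1:11434);
    OLLAMA_MODELS sets where models are stored.
    """
    env = dict(base)
    if host:
        env["OLLAMA_HOST"] = host
    if models_dir:
        env["OLLAMA_MODELS"] = models_dir
    return env


def start_ollama(env: Mapping[str, str]) -> subprocess.Popen:
    """Launch `ollama serve` in the background with the given environment."""
    return subprocess.Popen(["ollama", "serve"], env=dict(env))
```

For example, `start_ollama(ollama_server_env(os.environ, host="127.0.0.1:11500"))` would serve on port 11500, so Tag-AI's `ollama_endpoint` would become `http://localhost:11500/api/generate`. Quit any Ollama instance that is already running first, or the new settings will not take effect.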

Model Parameters

Advanced users can modify the LLaVA prompting in the Tag-AI source code:

- `photo_ollama.py` - Contains the LLaVA prompt template

- `IMPROVED_PROMPT` variable - Controls how Tag-AI asks for image tags
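When experimenting with the prompt, it helps to ask for a format that is easy to parse. The prompt and parser below are hypothetical and do not reproduce `IMPROVED_PROMPT`. They assume the model replies with comma- or newline-separated keywords, possibly with list markers such as `1.` or `-`.

```python
import re
from typing import List

# Hypothetical prompt for illustration; Tag-AI's real template is
# IMPROVED_PROMPT in photo_ollama.py.
EXAMPLE_PROMPT = (
    "List 10 to 20 short keywords describing this image, "
    "separated by commas. Reply with the keywords only."
)


def parse_tags(response: str, limit: int = 20) -> List[str]:
    """Split a model reply into clean, lower-case, de-duplicated tags."""
    tags: List[str] = []
    for part in re.split(r"[,\n;]+", response):
        # Drop leading list markers such as "1.", "2)", "-", "*" or "•".
        tag = re.sub(r"^\s*(?:\d+[.)]|[-*•])\s*", "", part)
        # Trim spaces, trailing periods and stray quotes.
        tag = tag.strip(" .\"'").lower()
        if tag and tag not in tags:
            tags.append(tag)
    return tags[:limit]
```

Models differ in how closely they follow formatting instructions, so a tolerant parser like this matters more when switching to an alternative model.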

Troubleshooting

Ollama Not Running

If Tag-AI can't connect to Ollama:

- Check if Ollama is running in the system tray/notification area

- If not running, launch it manually:

- Windows: Start Menu → Ollama

- macOS: Applications → Ollama

- Linux: Run `ollama serve` in a terminal, or `sudo systemctl start ollama` if the install script set Ollama up as a system service

- Verify Ollama is listening on port 11434: `curl http://localhost:11434/api/tags`
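The same check can be done from Python, for instance before starting a batch. This sketch mirrors the `curl` command above against Ollama's `/api/tags` endpoint; `ollama_is_up` is a hypothetical helper.

```python
import http.client
import json
import urllib.request


def ollama_is_up(base_url: str = "http://localhost:11434", timeout: float = 3.0) -> bool:
    """Return True if an Ollama server answers GET /api/tags with JSON."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as response:
            json.loads(response.read())  # a running server lists its models as JSON
            return response.status == 200
    except (OSError, ValueError, http.client.HTTPException):
        # OSError covers URLError, refused connections and timeouts;
        # ValueError covers a non-JSON reply from some other service.
        return False
```

A `False` result here with Ollama apparently running usually means it is listening on a different host or port (see `OLLAMA_HOST`).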

LLaVA Model Issues

If the LLaVA model isn't working:

- Check if the model is installed: `ollama list`

- If missing, reinstall: `ollama pull llava`

- If the model seems corrupted, try `ollama rm llava` followed by `ollama pull llava`

Memory Issues

If you encounter "out of memory" errors:

- Close other GPU-intensive applications

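- Try a smaller model variant: if you are using `llava:13b` or `llava:34b`, run `ollama pull llava:7b` and set `model_name` to `llava:7b` in your configuration (`moondream` is smaller still)

- Reduce image processing batch size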

Slow Performance

If processing is unusually slow:

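- Verify GPU acceleration is properly set up: while a model is loaded, `ollama ps` shows in its PROCESSOR column whether it is running on the GPU, the CPU, or split between them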

- Check system resource usage (CPU, GPU, memory)

- Ensure you're using the appropriate model for your hardware

- Try restarting Ollama and Tag-AI
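To check GPU offloading from a script, Ollama's `/api/ps` endpoint lists loaded models with their total `size` and the portion held in GPU memory (`size_vram`). A low share means much of the model runs on the CPU, a common cause of slow tagging. The helper names `gpu_share_report` and `fetch_ps` are hypothetical.

```python
import json
import urllib.request
from typing import Dict, List


def gpu_share_report(ps_response: Dict) -> List[str]:
    """Summarise /api/ps output as "<model>: N% in GPU memory" lines."""
    lines = []
    for model in ps_response.get("models", []):
        size = model.get("size", 0)
        vram = model.get("size_vram", 0)
        share = 100.0 * vram / size if size else 0.0
        lines.append(f"{model.get('name', '?')}: {share:.0f}% in GPU memory")
    return lines


def fetch_ps(base_url: str = "http://localhost:11434") -> Dict:
    """Fetch the list of currently loaded models from a running server."""
    with urllib.request.urlopen(f"{base_url}/api/ps", timeout=5) as response:
        return json.loads(response.read())
```

Run `print("\n".join(gpu_share_report(fetch_ps())))` right after processing an image, while the model is still loaded; an empty result means no model is currently in memory.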

Logs and Diagnostics

For detailed troubleshooting:

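- Ollama logs: `~/.ollama/logs/server.log` (macOS), `journalctl -u ollama` (Linux with the systemd service; a manually started `ollama serve` logs to its terminal), or `%LOCALAPPDATA%\Ollama\server.log` (Windows)

- Tag-AI logs: Located in the log directory (see Database Management)

- Run `ollama run llava` from a terminal to test the model directly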